This problem is not unique to one platform; it appears across the wide range of social media apps used by children and young people.

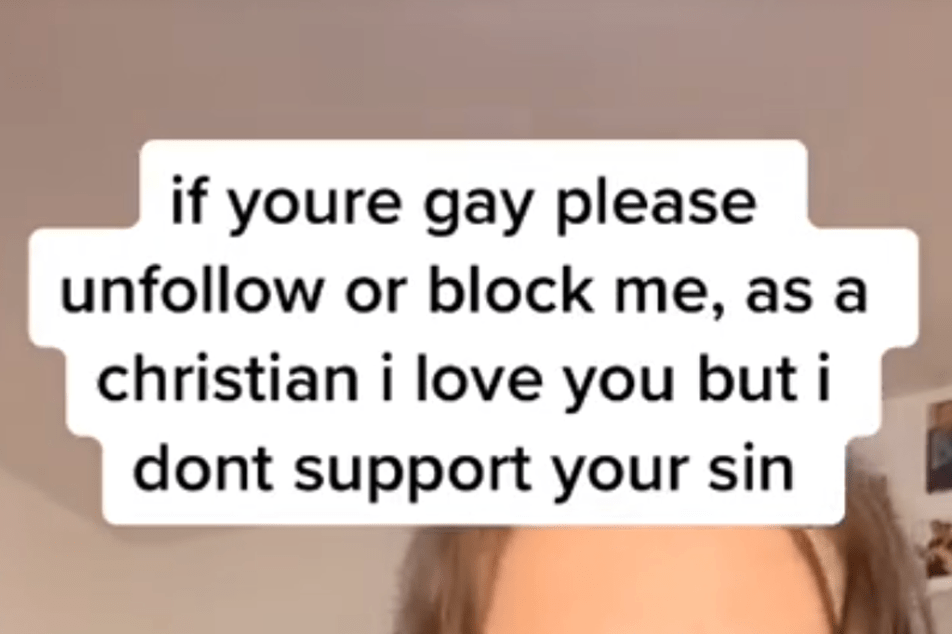

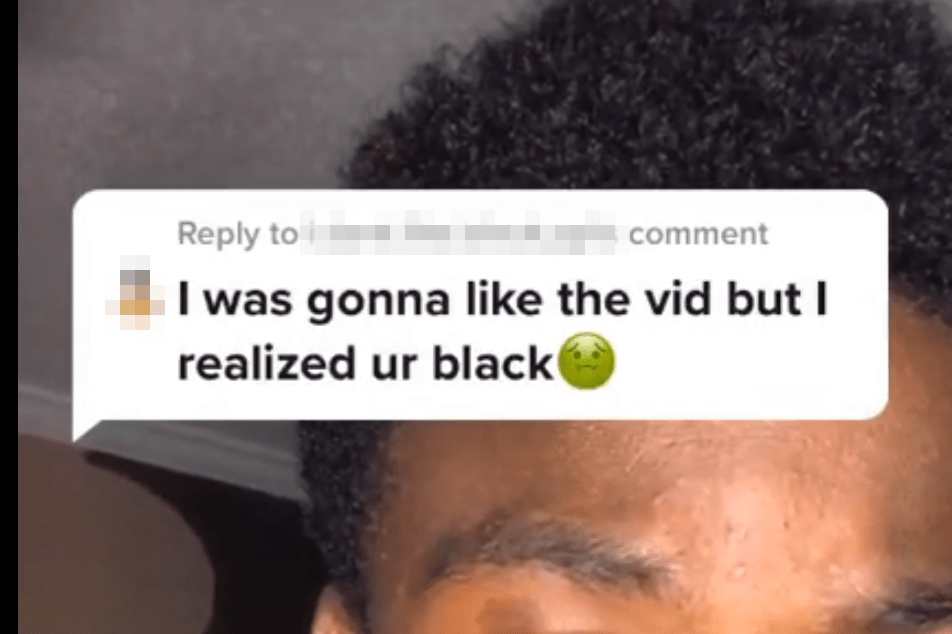

Hateful content is anything that promotes or celebrates hatred, violence or discrimination against a person or a group based on their identity. It can include images, videos, music and written text.

Why you should be concerned:

Talking to young people about hateful content online:

*For more information and tips, check out the ‘Harmful Content’ page in ‘The Online World’ section of your app.

To report online material that promotes terrorism or extremism, you can use the Home Office Anonymous Reporting Portal.

To report hate crime and online hate material, you can use the True Vision Reporting Portal.