
Danielle: Hello and welcome to Safeguarding Soundbites, with me, Danielle.

Natalie: And me, Natalie. This is the podcast for finding out all the week’s top online safeguarding news and alerts.

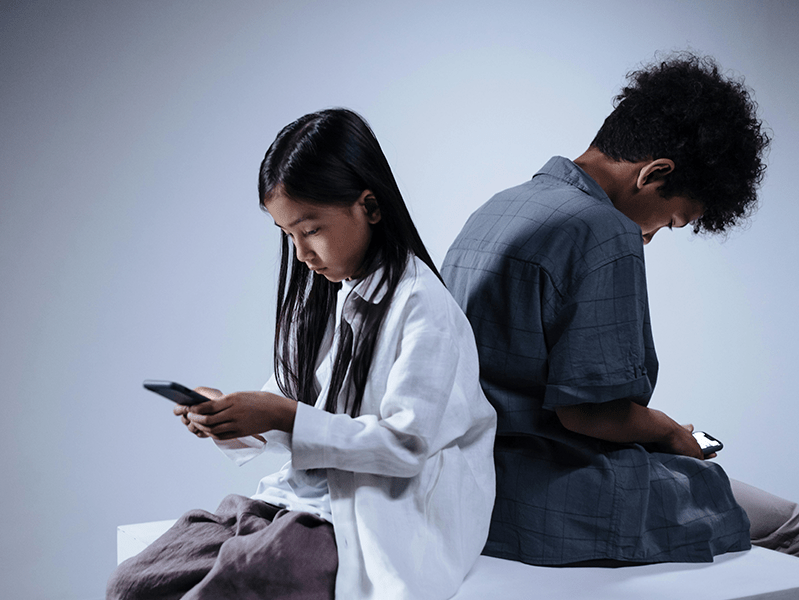

Danielle: This week: young people viewing child abuse imagery online, extremist content on Roblox and one school’s approach to mobile phones.

Natalie: So, let’s jump in! Danielle, will you start us off?

Danielle: Absolutely. So we’re starting this week with some Online Safety Bill news –

Natalie: – do you mean Online Safety Act?

Danielle: Ah, I keep forgetting it’s actually become law now, so it’s officially called the Online Safety Act. But yes, Ofcom, who are essentially in charge of overseeing the implementation of the Act, have released their draft age-check guidance for sites and apps that display or publish pornographic content. It’s part of a series of publications we can expect from Ofcom over the coming months and years in relation to the Act.

Natalie: So what does this particular guidance cover and what does it outline for those companies?

Danielle: It outlines their legal duties to implement age assurance that makes sure children are protected from pornographic content displayed or published on their service.

Natalie: Ah okay. And of course, protecting children and young people from accessing harmful content has always been one of the main purposes behind the Act.

Danielle: Absolutely! This will mean that any apps or sites that contain pornographic content will need some sort of age assurance process to determine whether the person trying to access the platform is a child. And it has to be a highly effective method.

Natalie: Did they give any suggestions?

Danielle: They did. They range from credit card checks and photo ID matching to age checks through mobile network operators and facial age estimation. They also gave a list of ineffective methods…

Natalie: Let me guess…a pop-up that asks users whether or not they’re 18?!

Danielle: That’s the one! Plus, any sort of disclaimers like ‘you can’t use this site if you’re under 18’.

Natalie: What a surprise!

But I think this news couldn’t come at a better time after it was revealed this week that thousands of young people across the UK are watching or sharing online child abuse material. A freedom of information request to police has disclosed shocking figures, including that 3,591 children were identified as watching or sharing child abuse images between January and October this year.

Danielle: That’s a really scary figure.

Natalie: It is. Now, we also have to point out two things about this. Firstly, this figure does include sexting – young people sending each other sexual imagery of themselves. We refer to this as self-generated child abuse imagery, and it is still very much against the law for under-18s. Secondly, a senior practitioner at a child protection charity reported hearing a lot about bulk file downloads, where young people may be looking for sexual images of people their own age but accidentally come across abusive material of younger children.

Danielle: That isn’t what they were intending to download; the images are hidden within the folder, as it were. But on the other hand, while it may make sense to an 11-year-old boy to look for sexualised images of girls his age, the content he finds will always be illegal and will depict the abuse of a child.

Natalie: And we also know young people are being exposed to violent pornographic content. In fact, one recent report from the Children’s Commissioner for England warned that more than two-thirds of young adults had seen violent pornography before they turned 18.

Natalie: So we have a number of factors playing into this; it is a complex situation. Some young people aren’t looking for this type of content at all. Others think they’re going to see content of someone their own age and don’t think it through.

Danielle: And there will also be cases in which a young person is exposed to this type of content without even searching for it, for example on a social media platform where the content has got through the filters and moderation in place.

Natalie: Education is really key here – young people need to understand the law and the implications. Creating, sharing and possessing child sexual abuse imagery is illegal – whether that’s of themselves, or another child.

Danielle: Also, Natalie, it’s so important that young people understand that, even if they’re sending content consensually, they can lose control of those photos or videos.

Natalie: You can find advice in your app, or on our website Ineqe.com, on what to do if a child or young person in your care has lost control of an image.

Danielle: Moving on now to our next story which is about the online gaming platform Roblox. Police in Australia have warned that the platform is being used by extremists to recruit children. Some of the games are said to feature virtual worlds where players can act out an extremist ideological narrative, disseminate propaganda and generate funds online.

Natalie: Roblox is a sort of ‘create your world’ game, isn’t it?

Danielle: It is. Users can create games and worlds for other users to come and play. It’s an extremely popular platform for children and young people: it has around 65 million users every day, and half of those users are 12 or younger. This is not the first time the platform has been accused of harbouring this type of content – there’s been talk of recreations of terrorist attacks, Nazi concentration camps and even Islamic State conflict zones.

Natalie: And Roblox have previously spoken out about this issue. In fact, they told The New York Times earlier this year that they recognise extremist groups are using a variety of tactics to get around the platform’s rules, but they’re determined to stay one step ahead of them.

Danielle: There might be lots of concerned parents listening to this story, wondering if they should let their child play Roblox now.

Natalie: Which is understandable, of course. Do you have any advice, Danielle?

Danielle: First of all, there are lots of games within Roblox, so don’t assume that your child has encountered any sort of extremism just because they use the platform. Have a conversation with them: what games are they playing? Ask what their favourite game is. Ask if they’ve come across any games that upset or worry them, and reassure them that they can chat to you or another trusted adult if they come across something upsetting.

Natalie: Another top tip is to sit alongside them while they play, or even play with them by making your own account. You can make this a fun activity, not an ‘I’m supervising you’ sort of thing! And of course, use the safety settings available – you can visit oursafetycentre.co.uk to find really clear guides on how to block, report and use all of the safety settings on Roblox.

Natalie: Moving on to the news that a school in Kent will be bringing in a new rule in the new year requiring pupils to lock their phones away in pouches. Pupils at the academy school in Ashford will put their phones in pouches during the school day, but the phones won’t be taken away from them. The principal explained that he hoped the rule would limit disruption in the school and help with safeguarding.

Danielle: We know there are mixed opinions on mobile phones in schools. In fact, we recently sent out a survey through our apps to get your thoughts on the idea of banning phones in schools.

Natalie: That’s right. And we’ve taken your opinions and put them into our brand new episode of the Online Safety Show – a monthly episode that rounds up online safeguarding news for children and young people.

Danielle: Our next episode has not yet been released but… Natalie, should we play our listeners a clip of Tyla discussing the results of the survey?

Natalie: Oh go on then…

Natalie: Interesting findings! To hear more about this topic, check out our Safeguarding Soundbites special all about mobile phone use in schools and of course go watch the Online Safety Show in your app.

Danielle: And finally, it’s time for our safeguarding success story of the week. This week, we’re highlighting the good work of some true digital heroes! Three primary schools from Wrexham in Wales have taken part in Digital Hero training sessions.

Natalie: Sounds exciting!

Danielle: It’s a really amazing initiative. Children and young people who feel confident using tech can use their skills to help others who are less confident getting online, including people living with dementia. It’s been shown that people living with dementia engage more positively when interacting with children, so it’s a brilliant match. A big shout out to Borras Park Primary School, Victoria CP Primary School, and The Rofft Primary School for taking part.

Natalie: And all the other Digital Heroes in Wales, too! That’s all from us this week. Join us next time for more news and updates. Remember you can find more safeguarding advice on our range of safeguarding apps.

Danielle: And you can follow us on social media by searching for INEQE Safeguarding Group. Until next time…

Both: Stay safe.
